Murf AI is a popular AI voice platform used for marketing videos, explainers, and digital content. Teams evaluating it often examine pricing, voice quality, licensing terms, voice cloning controls, and workflow fit.

Learning and development teams look for narration that holds attention across long-form training. Marketing teams focus on speed and brand consistency. Agencies prioritize collaboration and client-safe licensing. Each group evaluates Murf AI through a different operational lens.

This guide reviews leading Murf AI alternatives and competitors based on voice quality, licensing clarity, workflow fit, multilingual support, and enterprise readiness. Use it to narrow your shortlist based on how your team actually produces content.

TL;DR: Murf AI alternatives & competitors (comparison snapshot)

- Teams explore Murf AI alternatives when voiceover must support large training libraries, frequent updates, or regulated environments.

- Key differences across Murf AI competitors appear in voice consistency, licensing clarity, governance, accessibility support, and workflow fit — not just how a sample sounds in a demo.

- Some platforms focus on expressive voice cloning and creator-driven projects. Others emphasize structured voice libraries built for repeatable production across training, compliance, and global communication.

- Voice cloning versus licensed voice libraries often becomes the deciding factor for L&D and enterprise teams managing long-term reuse and brand risk.

- Murf AI works well for marketing and general voiceover production. Other tools may align more closely with enterprise training, multilingual rollouts, or infrastructure-level speech synthesis.

- WellSaid fits teams that need licensed, consistent AI voices designed for ongoing learning and communication workflows.

Why teams look for Murf AI alternatives

Teams rarely search for alternatives without a reason. They want more control over audio generation, clearer licensing, or stronger workflow alignment as AI voice tools move from experimentation to production.

Common reasons teams evaluate other platforms:

- AI voices sound synthetic or drift toward robotic voices during extended training or compliance modules

- Limited control over pronunciation, pacing, emphasis, or voice profiles tied to a specific voice model

- Commercial licensing terms feel unclear for external distribution, YouTube videos, or client-facing assets

- Voice cloning technology raises brand safety and governance questions

- Pricing scales quickly as minutes, users, and audio generation increase

- Limited visibility into SOC 2 or GDPR posture

- Workflow friction when managing large content libraries or content localization across regions

- A voice library that lacks the consistency needed across programs, video translation projects, or global rollouts

Voice synthesis tools vary widely in quality and governance. Some emphasize voice changers, AI Avatar features, and flexible voice cloning technology for creative work. Others focus on stable text-to-speech technology built for structured production environments. Understanding that difference helps teams choose the right fit for training, marketing, YouTube videos, or large-scale content localization.

How we evaluated Murf alternatives

Most teams comparing Murf AI alternatives already have content in production. Courses are live. Videos are published. Stakeholders review scripts and audio as part of normal operations.

This evaluation examined how each platform performs once voice becomes embedded in daily workflows. Quick demos and isolated samples were not the focus. The review assessed how well each tool supports real production environments where scale, reuse, and governance matter.

Each Murf AI competitor was evaluated across common L&D, marketing, and enterprise workflows, including content updates, multilingual rollouts, voice library management, and version control.

Evaluation criteria

- Voice quality and long-form consistency: Naturalness over extended listening carried significant weight. Many teams use AI voice generation to produce 20–30 minute training modules, onboarding series, or product walkthroughs. Stable pacing, predictable pronunciation, strong audio quality, and consistent tone across regenerations matter more than how a short AI voice synthesis sample sounds in isolation.

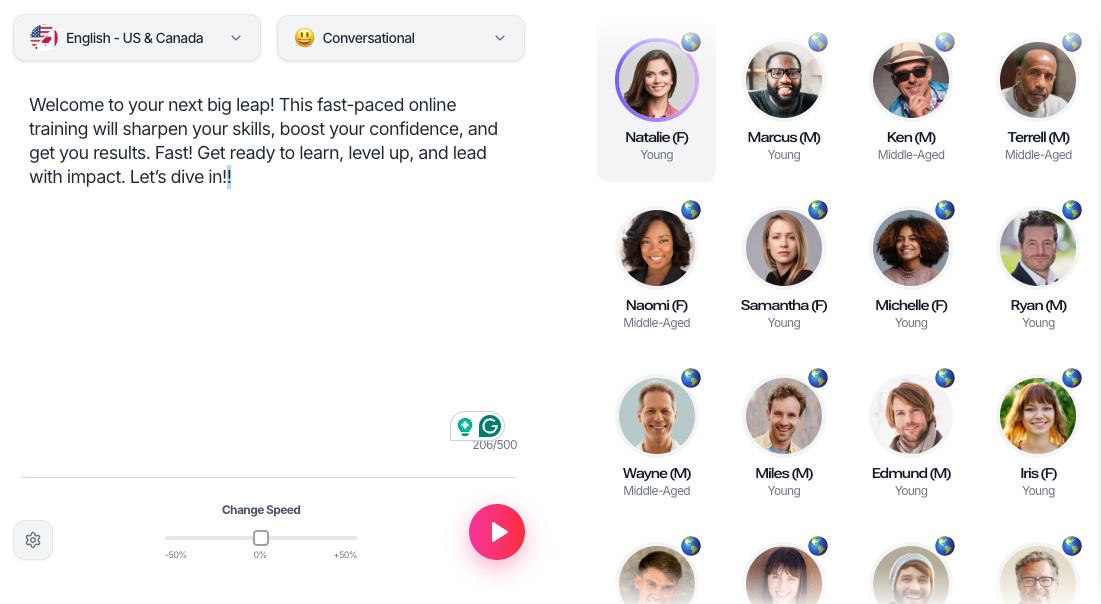

- Voice libraries versus voice cloning: Some platforms emphasize voice cloning and deep customization options. Others focus on curated voice libraries built from licensed actors. The evaluation considered how each AI voice synthesis model affects approvals, reuse, and long-term maintenance. Teams concerned with brand safety, repeatability, and emotion-enhancing features often scrutinize this decision point.

- Licensing clarity and commercial rights: Clear commercial usage terms reduce risk when audio content remains live for years or appears in customer-facing programs. The review assessed how directly each platform defines ownership, reuse rights, and external distribution for AI voice generation output.

- Security and compliance posture: Enterprise readiness requires documented security and privacy practices. SOC 2 alignment, GDPR support, defined data retention policies, and audit controls were part of the evaluation. Procurement and security teams need answers before AI voice synthesis becomes standard inside an organization, especially when speech recognition or automated workflows are involved.

- Accessibility and caption alignment: Teams frequently pair audio content with captions and on-screen elements. The review looked at support for caption file exports, subtitle generator capabilities, predictable pronunciation, and stable timing to support accessibility workflows.

- Workflow fit for content teams: Content owners need to edit scripts, regenerate audio, and manage versions without relying on engineering resources. The evaluation assessed how easily teams use each voiceover tool to update small sections, scale audio content across regions, and prevent voice production from becoming a recurring bottleneck.

- Pricing transparency as usage scales: Audio libraries expand quickly as AI voice generation adoption grows. The review looked beyond entry-level pricing and examined how costs scale with minutes, users, languages, and customization options.

This comparison prioritizes fit over ranking. Different Murf AI competitors serve different use cases across AI voice generation and audio content production. The sections that follow outline where Murf AI excels and where teams often seek alternatives as production demands grow.

Murf AI: What it is and is not

Many teams start with Murf AI because they want to produce voiceovers quickly. The platform supports AI voice generation with a broad set of AI voices and tools suited for marketing videos, explainers, and general digital content experiences. For early-stage projects or lightweight production needs, that speed can be appealing.

As voice becomes part of a larger system, expectations shift. Ongoing training programs, multilingual rollouts, and regulated environments introduce new requirements around governance, licensing clarity, workflow control, and consistent audio quality across AI voice synthesis outputs.

Where Murf AI performs well

- Marketing and short-form content production: Murf AI supports marketing teams creating product videos, social clips, and internal communications. Its voice library and customization options cover a range of styles that work well for shorter video content and campaign-driven audio content.

- Quick turnaround for general voiceover needs: Teams producing explainer videos or promotional assets can use this voiceover tool to generate audio quickly without complex setup. For organizations testing AI voice generation for the first time, this lowers the barrier to entry.

Where teams raise concerns

- Long-form consistency in training and compliance content: Voices that perform well in short segments may require closer review in extended modules. L&D teams often look for steady pacing, reliable pronunciation, and stable audio quality across 20-minute lessons or longer AI voice synthesis sessions.

- Clarity around licensing and reuse: When audio content appears in customer-facing programs or remains live for years, buyers need clear commercial rights and defined usage terms for AI voice generation. Questions around reuse and redistribution can surface during procurement or legal review.

- Voice cloning and brand governance: Voice cloning expands customization options and emotion-enhancing features. It also raises questions about consent, ownership, and long-term availability. Enterprise teams often evaluate how cloning aligns with internal brand standards and compliance policies before adopting it widely.

- Enterprise security and audit requirements: Security and procurement teams review data handling, compliance documentation, and access controls before approving AI voice synthesis platforms for large-scale deployment. Limited visibility into these areas, especially where speech recognition or automated integrations are involved, can slow adoption in regulated industries.

These factors explain why many teams evaluate Murf AI alternatives once AI voice generation becomes embedded in structured production environments. The following sections explore how other platforms address these same pressures as audio content scales across programs and regions.

The top Murf AI alternatives & competitors

Short demos rarely reveal how a platform performs once voice becomes part of ongoing production. The comparison below highlights how Murf AI and leading alternatives differ across voice quality, licensing clarity, multilingual support, and enterprise readiness.

Comparison table: Murf AI vs leading alternatives

*G2 ratings reflect publicly listed scores at time of writing and may change.

1. WellSaid

Best for: Learning & development teams, regulated industries, and enterprise marketing teams that need licensed, studio-quality AI voices.

WellSaid supports teams who create content that teaches, guides, and informs. Voice becomes a repeatable production layer across onboarding, compliance, enablement, customer education, and internal communications.

The platform focuses on:

- Licensed, rights-cleared voice actors

- Stable, long-form listening quality

- Clear commercial usage rights

- Enterprise governance and auditability

Teams choose WellSaid when audio must stay consistent across large libraries and long timeframes. Updates happen frequently. Multiple stakeholders review scripts. Content remains live for years. The workflow supports that reality.

Key features

- Studio-quality AI voices created from licensed professional voice talent

- Closed-source platform with governed AI

- SOC 2 and GDPR compliance documentation

- Clear commercial licensing designed for long-lived and external content

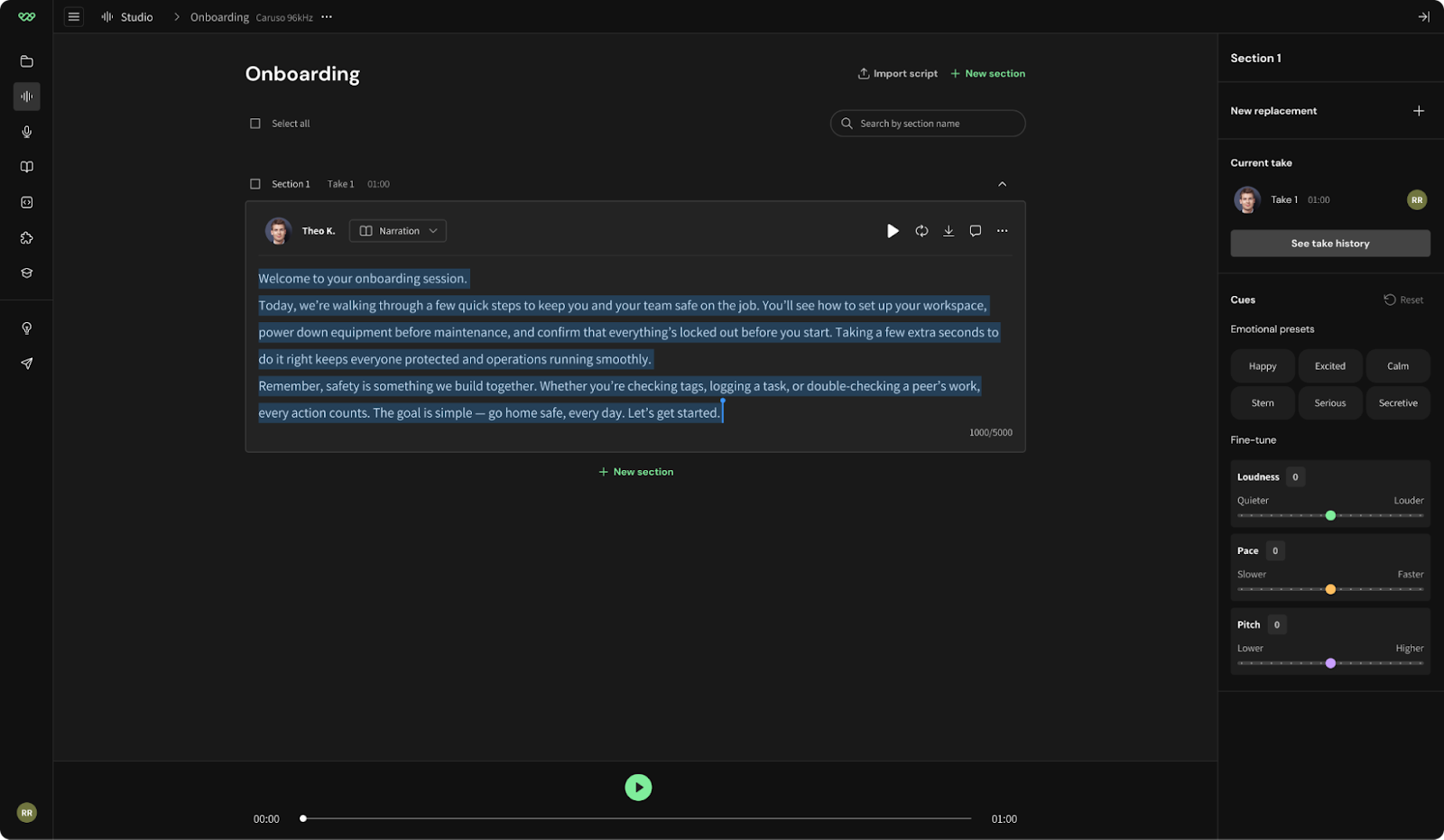

- Built-in SRT and VTT export for accessibility alignment

- Multilingual consistency across programs and regions

- Workflow-ready for LMS platforms and authoring tools

- API for developers that supports controlled integration into existing systems

- Emotion customization and personalization using Cues

What licensing clarity looks like in practice

A training program launches internally and later expands to partners and customers. The same audio remains in use without renegotiation or replacement. Clear commercial rights remove ambiguity during procurement and distribution.

Workflow example

A policy update affects multiple compliance modules across regions. The team edits the script, regenerates the audio, and re-exports captions in the same session. Production completes within hours. The voice remains consistent across every affected course.

Where it differs from Murf AI

- Built for structured learning and communication workflows

- Stronger enterprise governance and documented compliance posture

- No open voice cloning marketplace

- Prioritizes consistency, voice accuracy, and long-term reuse

- Use cases span multiple industries

Considerations

- Not designed for novelty or end-user voice cloning experimentation

- Less flexibility for highly customized or experimental voice creation projects

2. ElevenLabs

Best for: Expressive voice cloning and creator-driven projects.

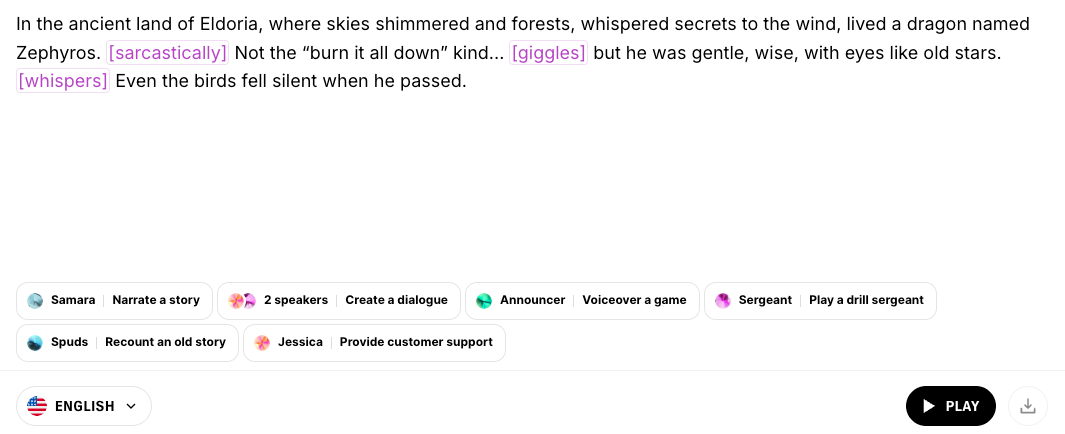

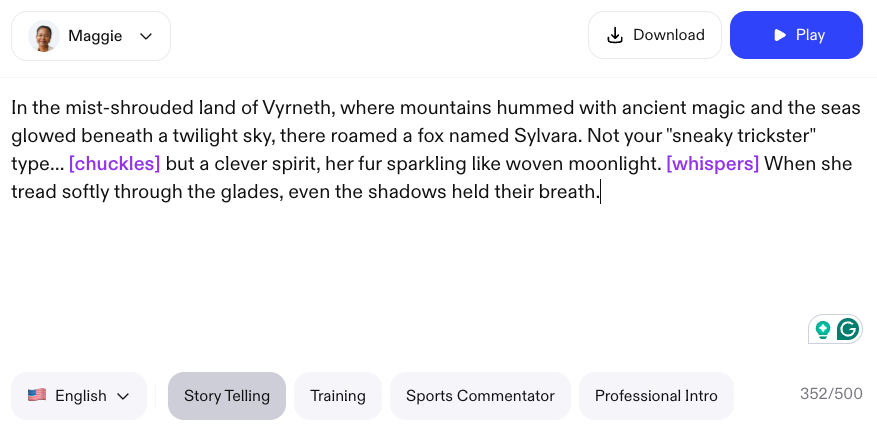

ElevenLabs centers its platform around expressive voice synthesis and advanced voice cloning. The technology attracts creators working in narrative formats where tone, character, and emotional range shape the experience.

Common users include:

- Content creators producing short-form and long-form media

- YouTube producers building narration-driven channels

- Audiobook narrators and indie publishers

- Developers experimenting with custom AI voices for apps and interactive projects

The platform emphasizes flexibility and experimentation, particularly in voice cloning and character-driven audio.

Key features

- Advanced voice cloning tools for custom or character voices

- Highly expressive delivery with strong emotional range

- Expanding voice library across languages and styles

- API access for experimentation, prototyping, and integration

ElevenLabs often appeals to teams focused on storytelling, entertainment, and creator-led content where vocal nuance drives engagement.

Considerations

- Licensing varies by plan and intended usage

- Voice cloning introduces questions around consent, ownership, and IP exposure

- Compliance documentation for business deployment may require review

- Less specialized for structured L&D workflows, caption alignment, and regulated training environments

Teams evaluating ElevenLabs for business use typically review licensing terms and governance posture before scaling production across large programs.

3. Speechify

Best for: Consumer text-to-speech and content reading.

Speechify focuses on converting written text into audio for listening. The platform serves users who want to consume articles, documents, or study materials in audio format.

Typical users include:

- Students listening to coursework

- Professionals reviewing reports or long documents

- Accessibility users who prefer audio playback

Speechify operates primarily as a listening tool rather than a production voiceover platform.

Key features

- Browser extensions and mobile apps for easy playback

- Natural-sounding AI voices optimized for readability

- Strong consumer adoption and brand recognition

- Simple text-to-audio conversion for quick listening

Considerations

- Designed for text-to-speech consumption, not structured content production

- Limited support for managing large content libraries or recurring updates

- Not built for multi-author training workflows

- Licensing is primarily aligned with personal or individual use

Teams comparing Speechify to Murf AI or other production platforms usually determine quickly whether they need a listening tool or a repeatable voiceover system.

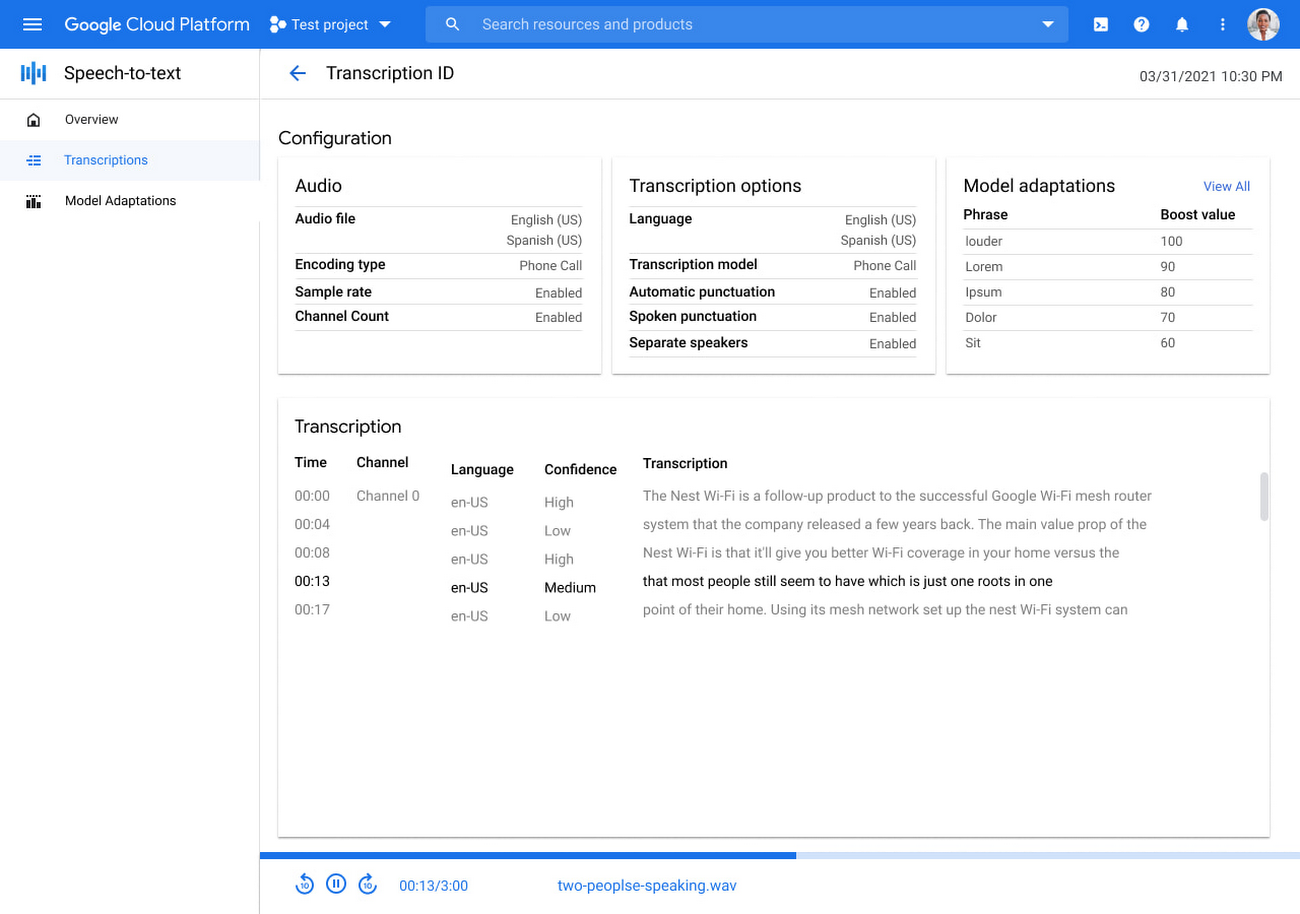

4. Google Cloud Text-to-Speech

Best for: Developer-driven applications and scalable speech synthesis.

Google Cloud Text-to-Speech is part of Google Cloud’s AI portfolio. It provides programmatic voice synthesis for applications, digital products, and automated systems.

Common use cases include:

- Embedding speech into apps or software platforms

- Powering IVR systems and automated customer interactions across digital channels

- Supporting high-volume speech synthesis at a global scale

The service functions as infrastructure rather than a complete production environment for content teams.

Key features

- Broad language and locale support

- API-based deployment within Google Cloud environments

- Scalable infrastructure for high-volume output

- Integration with other Google Cloud services

Organizations with engineering resources can integrate Google TTS into custom systems and workflows.
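To give a sense of the engineering work involved, here is a minimal sketch of preparing a synthesis request and decoding the response. It assumes the public v1 REST endpoint and its JSON field names (`input`, `voice`, `audioConfig`, `audioContent`); verify these against the current Google Cloud documentation. The helper names are hypothetical. Authentication and the HTTP call are left out, and the response shown is simulated.

```python
import base64
import json

# Assumed public REST endpoint for Cloud Text-to-Speech v1.
# Authentication and the actual HTTP call are intentionally omitted.
SYNTHESIZE_URL = "https://texttospeech.googleapis.com/v1/text:synthesize"


def build_synthesize_request(text: str, language_code: str = "en-US") -> str:
    """Return the JSON body for a synthesis request (hypothetical helper)."""
    payload = {
        "input": {"text": text},
        "voice": {"languageCode": language_code},
        "audioConfig": {"audioEncoding": "MP3"},
    }
    return json.dumps(payload)


def decode_audio(response_body: str) -> bytes:
    """Extract audio bytes from the base64 `audioContent` field of a response."""
    return base64.b64decode(json.loads(response_body)["audioContent"])


request_body = build_synthesize_request("Welcome to onboarding.")

# Simulated response so the sketch runs without network access or credentials.
fake_response = json.dumps({"audioContent": base64.b64encode(b"fake-mp3-bytes").decode("ascii")})
audio_bytes = decode_audio(fake_response)
```

Everything around this call (script storage, versioning, review, caption timing) would still need to be built and maintained by the team, which is the operational cost noted in the considerations that follow.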

Considerations

- Requires developer resources for setup, maintenance, and updates

- Voice quality varies across models and configurations

- Not purpose-built for training, onboarding, or content production workflows

- Day-to-day voice management depends heavily on engineering support

- Licensing and cost structure depend on usage volume and cloud configuration

Teams evaluating build-versus-buy decisions often consider Google Cloud Text-to-Speech when they prioritize infrastructure control and global scalability. Training and marketing teams without dedicated engineering support may find additional operational complexity.

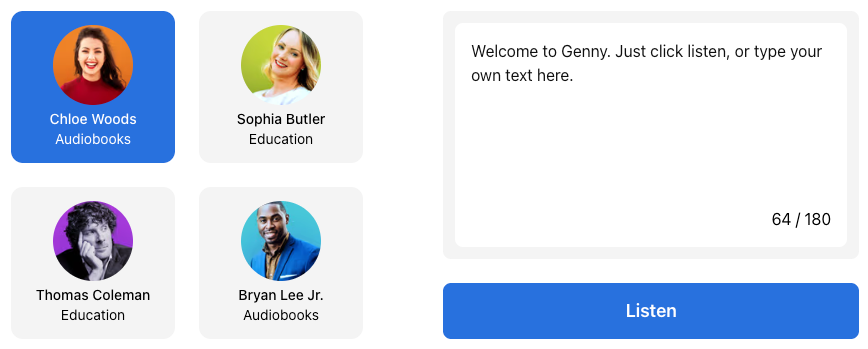

5. LOVO AI

Best for: Marketing teams and video content creators.

LOVO AI centers its platform on creative voice production for video, ads, and social content. Marketing teams often evaluate LOVO AI when tone variation and stylistic flexibility matter for campaign work.

Typical users include:

- Creative teams producing brand videos and explainers

- Social media managers creating short-form content

- Agencies building campaign assets across clients

- Content creators focused on YouTube or promotional media

The platform supports rapid voiceover production aligned to marketing timelines.

Key features

- Broad voice library with varied tones and styles

- Voice cloning capabilities for custom delivery

- Video-focused tools that support campaign workflows

- Accessible interface for content creators and marketing teams

LOVO AI often fits environments where expressive delivery and creative control drive the buying decision.

Considerations

- Commercial rights vary by plan and require review for external distribution

- Governance and compliance features are more limited compared to enterprise-focused platforms

- Maintaining consistent voice across long-form or frequently updated training content can require added coordination

- Less optimized for structured L&D workflows or compliance-heavy programs

Teams producing marketing assets at scale may find LOVO AI aligns well with campaign-based production. Organizations building large training libraries often review workflow depth and licensing posture more closely.

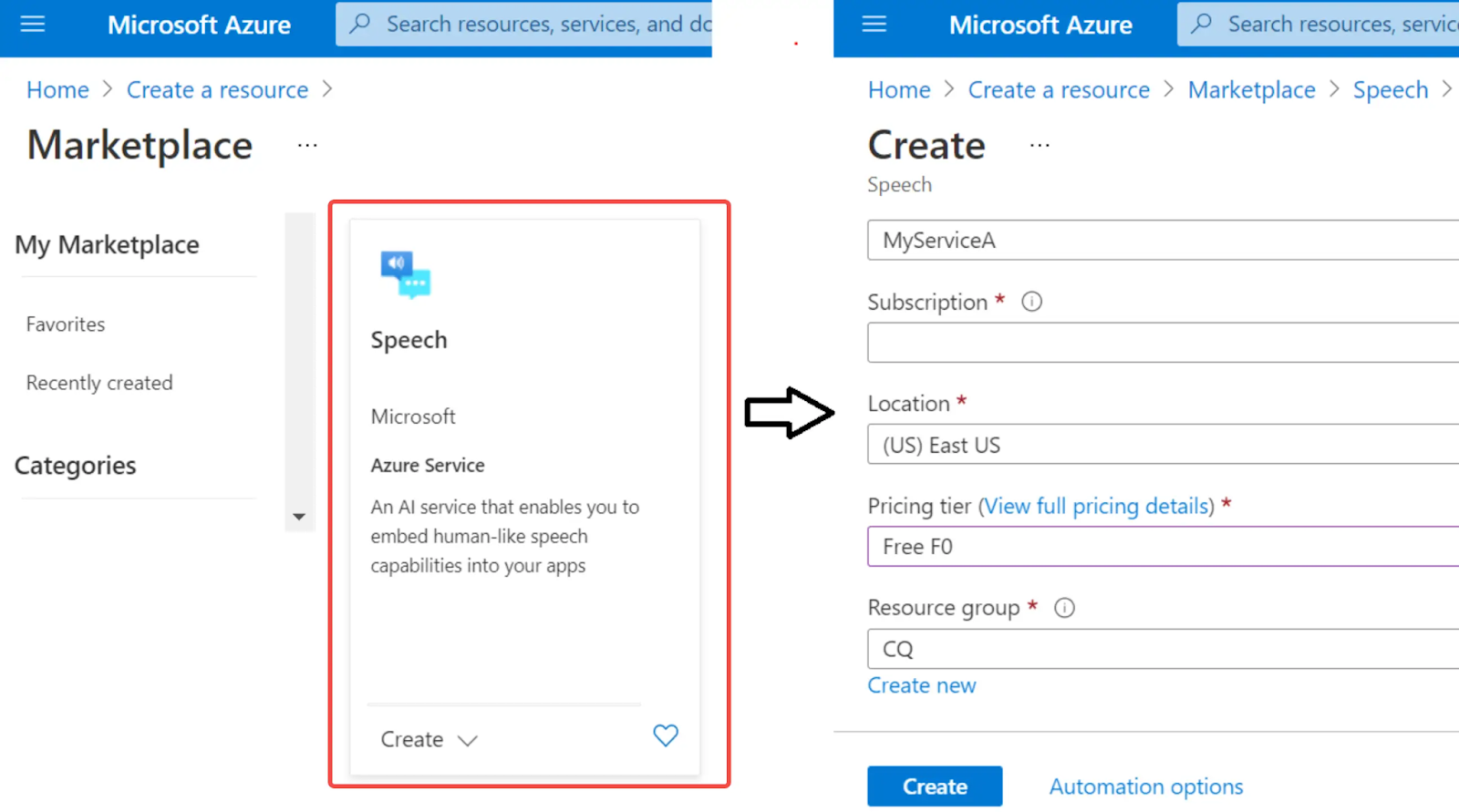

6. Microsoft Azure Text-to-Speech

Best for: Enterprise IT teams and application-level integrations.

Microsoft Azure Text-to-Speech operates within Azure Cognitive Services. It supports speech synthesis at the infrastructure level for applications, IVR systems, and global digital products.

Organizations typically evaluate Azure TTS when they already use Microsoft Azure across identity, cloud hosting, and compliance frameworks.

Common use cases include:

- Embedding voice into enterprise software

- Powering IVR and automated service systems

- Supporting large-scale speech synthesis across regions

Azure TTS functions as part of a broader cloud environment rather than a standalone production tool for content teams.

Key features

- Alignment with Azure security, identity, and compliance standards

- Broad multilingual support across global markets

- Neural voice options designed for scalable output

- Strong API ecosystem within Microsoft’s cloud infrastructure

Enterprise IT teams often value centralized governance and integration across existing Azure deployments.

Considerations

- Requires technical implementation and ongoing engineering involvement

- Built primarily for developers and infrastructure teams

- Voice production workflows must be designed and maintained internally

- Less suited to rapid, self-serve updates by L&D or marketing teams

Azure Text-to-Speech often meets procurement and security requirements. Daily voice production for training, onboarding, or marketing content typically depends on internal development resources.

Murf AI vs competitors: how to decide

After reviewing feature sets, most teams narrow their shortlist quickly. The final decision centers on operational fit: how well a platform supports daily production once AI voice synthesis becomes embedded in workflows.

The focus shifts from demo samples to practical questions. Who owns updates? How often does content change? What level of governance applies to AI voices used in training, marketing, YouTube videos, or client-facing video translation projects? The answers guide the decision.

Use the framework below to evaluate Murf AI competitors against your environment.

For learning & development teams

Learning teams treat voice as part of an ongoing system. Courses evolve. Policies change. Content remains live for years.

Prioritize:

- Licensing clarity: Confirm clear commercial rights for internal and external training, including content localization and regional rollouts. Review reuse terms tied to specific voice profiles or voice models.

- Long-form listening quality: Evaluate voice consistency across 20–30 minute modules. Stable pacing and natural delivery reduce listener fatigue and avoid robotic voices in extended AI voice synthesis sessions.

- Accessibility exports: Look for reliable SRT or VTT export to keep voice, captions, and on-screen content aligned across multilingual and video translation workflows.

- Compliance posture (SOC 2, GDPR): Review documented security and privacy practices before scaling AI voice tools across departments, especially when speech data or speech recognition integrations are involved.

When AI voices support structured training libraries, governance and repeatability carry more weight than experimentation with voice changers or AI Avatar features.
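For teams checking accessibility exports, it helps to know what an SRT file actually contains. The sketch below builds SRT text from timed caption segments. The segment timings and caption text are hypothetical; in practice they would come from whichever platform generates the audio.

```python
def format_timestamp(seconds: float) -> str:
    """Render seconds as an SRT timestamp: HH:MM:SS,mmm."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, ms = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments: list[tuple[float, float, str]]) -> str:
    """Build SRT text from (start_seconds, end_seconds, caption) tuples."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        # Each cue: sequence number, time range, caption text, blank line.
        blocks.append(
            f"{index}\n{format_timestamp(start)} --> {format_timestamp(end)}\n{text}\n"
        )
    return "\n".join(blocks)


# Hypothetical segments for illustration only.
segments = [
    (0.0, 2.5, "Welcome to the compliance module."),
    (2.5, 6.0, "This lesson covers the updated policy."),
]
srt_text = to_srt(segments)
print(srt_text)
```

WebVTT files follow a similar cue structure but begin with a `WEBVTT` header line and use a period rather than a comma before the milliseconds. When a script changes, captions stay aligned only if the timings are regenerated along with the audio.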

For marketing & content creators

Marketing teams move quickly. Campaign timelines compress. Video content production rarely slows down.

Prioritize:

- Speed of production: Generate and update audio without complex setup or external recording cycles. Efficient audio generation supports fast iteration on product videos and YouTube videos.

- Brand voice consistency: Select a voice model and defined voice profiles that align with brand tone and remain consistent across product videos, explainers, and launch assets.

- Creative workflow alignment: Confirm compatibility with video and design tools, including workflows that incorporate AI Avatar formats or content localization for global audiences.

- Commercial rights for distribution: Validate usage terms for paid ads, customer-facing video content, YouTube videos, and cross-channel campaigns.

When AI voice becomes part of recurring campaign production, text-to-speech technology must support creative speed without sacrificing audio quality.

For agencies

Agencies manage multiple clients, brands, and approval cycles. Voice decisions affect both creative output and contractual risk.

Prioritize:

- Voice variety: Access a broad voice library with distinct voice profiles and voice models that support different industries and brand styles, without relying solely on voice cloning technology.

- Collaboration controls: Enable shared access, version management, and structured review across internal teams and client stakeholders.

- Scalable pricing: Review how costs grow as client audio generation expands across campaigns, video translation, and content localization efforts.

- Client-safe licensing: Confirm commercial usage terms that cover external distribution, long-term reuse, and multi-market deployment.

Agencies benefit from platforms that deliver predictable AI voice synthesis across projects while protecting client relationships and brand standards.

A practical test

Ask these questions during evaluation:

- Who updates scripts and regenerates audio?

- How frequently does content change?

- Where does audio appear — internal training, public YouTube videos, AI Avatar experiences, or both?

- Does the roadmap include video translation or content localization?

- What level of compliance review applies?

- How large will the voice library and set of voice profiles become over time?

These answers clarify which Murf AI competitors align with real production demands. Audio quality, governance, licensing clarity, and workflow fit determine how well AI voices perform once usage expands beyond simple text-to-speech technology into full-scale audio generation.

Pricing and “free alternatives”: what to know

Searches for Murf AI alternatives often include the word “free.” Those results can create confusion. Free tools typically solve a narrow need. Limits surface once voice becomes part of structured production or regulated distribution.

Is there a free alternative to Murf AI?

In most cases, “free” includes:

- Strict caps on minutes, exports, or available AI voices

- Restricted commercial usage rights

- Limited voice library access

- Fewer controls over pronunciation, pacing, or voice synthesis settings

- No commitments around long-term availability

Free tiers work for experimentation or personal use. Training, onboarding, and customer-facing video content usually outgrow those limits quickly.

Why pricing comparisons can be misleading

Entry-level pricing rarely reflects long-term production cost.

Two factors shape total investment:

- Cost per finished minute: Generated audio represents only part of the equation. Review cycles, script revisions, caption updates, and version management add overhead.

- Cost of change: Terminology or policy updates can affect dozens of modules. Platforms that support fast re-renders and caption exports limit disruption. Manual coordination increases time and labor.
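These two factors can be put into rough numbers. The sketch below is one possible way to estimate them, not a standard formula. Every figure is a hypothetical placeholder; substitute your own volumes, fees, and labor rates.

```python
from dataclasses import dataclass


@dataclass
class ProductionEstimate:
    """All figures are hypothetical inputs supplied by the evaluator."""
    finished_minutes: float  # minutes of approved audio published in the period
    platform_cost: float     # subscription or usage fees for the period
    review_hours: float      # review, revision, caption, and versioning time
    hourly_rate: float       # loaded labor cost per hour

    def cost_per_finished_minute(self) -> float:
        """Platform fees plus labor overhead, divided by published minutes."""
        total = self.platform_cost + self.review_hours * self.hourly_rate
        return total / self.finished_minutes


def cost_of_change(modules_affected: int, hours_per_module: float, hourly_rate: float) -> float:
    """Labor cost of pushing one terminology or policy update through a library."""
    return modules_affected * hours_per_module * hourly_rate


estimate = ProductionEstimate(
    finished_minutes=600, platform_cost=1200.0, review_hours=40, hourly_rate=60.0
)
print(estimate.cost_per_finished_minute())  # (1200 + 40 * 60) / 600 = 6.0

# Same update across 24 modules: fast re-render versus manual coordination.
print(cost_of_change(24, 0.5, 60.0))  # 720.0
print(cost_of_change(24, 3.0, 60.0))  # 4320.0
```

In this illustration the per-module update time, not the subscription fee, drives most of the difference between the two scenarios.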

Pricing lens

Evaluate:

- How pricing scales as minutes, users, and languages grow

- Whether commercial rights cover long-term reuse

- How often content updates occur

- What level of review and governance applies

Clear licensing and stable voice synthesis reduce friction when usage expands. Predictable costs support planning as voice libraries grow.

Choosing the right Murf AI alternative

There is no universal AI voice platform that fits every team.

The right choice depends on:

- Licensing needs for internal and external distribution

- Expectations around long-form voice quality

- Workflow fit for updates and version control

- Enterprise compliance requirements

Teams producing training, onboarding, compliance, or high-volume communication content benefit from clarity and governance. Prioritize licensed AI voices, stable speech synthesis, and workflows that support frequent updates.

Select a platform that supports how your team works today and how it plans to scale tomorrow. Explore WellSaid Studio today.

FAQs

Is Murf AI better than ElevenLabs?

Murf AI often supports marketing videos and general business voiceover. ElevenLabs focuses heavily on expressive narration and voice cloning.

Teams producing storytelling-driven content may prioritize expressive range. Organizations building structured training or compliance programs typically examine licensing clarity and governance before selecting a platform.

Who are Murf AI’s biggest competitors?

Murf AI competes across several segments of the AI voice market:

- Enterprise voice platforms: WellSaid

- Voice cloning and creator-focused tools: ElevenLabs

- Cloud infrastructure TTS providers: Google Cloud Text-to-Speech, Microsoft Azure Text-to-Speech

- Marketing-focused voice platforms: LOVO AI

- Consumer text-to-speech tools: Speechify

The competitive landscape spans content teams, developers, agencies, and accessibility users. The right category depends on how voice synthesis fits into your workflow.

What is the difference between Murf AI and WellSaid Labs?

Murf AI targets general voiceover use cases such as marketing videos and digital content. WellSaid focuses on structured learning, training, and enterprise communication.

Key distinctions often include:

- Licensed, rights-cleared voice actors versus broader AI voice sourcing models

- Documented enterprise compliance posture

- Workflow support for frequent script updates and caption exports

- Governance controls designed for large content libraries

Teams producing long-term training programs or regulated material often review these areas closely during evaluation.

Is Murf AI worth it?

Murf AI can deliver value for teams producing marketing content, explainers, or internal announcements.

Value depends on scope and scale. Consider:

- How often content updates occur

- Whether audio appears in customer-facing or regulated environments

- How large the voice library will become

- What level of compliance review applies

Assessing these factors clarifies whether Murf AI aligns with long-term production needs.

Can you use Murf AI for commercial projects?

Commercial usage typically depends on the subscription tier and usage terms. Review licensing language carefully before publishing audio in paid ads, customer education programs, or distributed video content.

Confirm rights for long-term reuse, redistribution, and international deployment if your content remains live for years.

Does Murf AI support multilingual content?

Murf AI supports multiple languages. Multilingual capability varies in depth across platforms, including available voices and pronunciation control.

Teams planning global rollouts often evaluate:

- Voice consistency across languages

- Caption export capabilities

- Workflow efficiency for updating multiple regions simultaneously

Global training programs require more than language coverage alone. They require consistent tone and repeatable production standards.

What should enterprises look for in an AI voice platform?

Enterprise teams typically review:

- Clear commercial licensing terms

- Documented security and privacy standards

- Auditability and access controls

- Long-form listening quality

- Accessibility support

- Predictable pricing as usage grows

Voice becomes part of the business infrastructure once adoption expands. Treat evaluation accordingly.

Is voice cloning safe for business use?

Voice cloning introduces flexibility and creative control. It also raises questions around consent, intellectual property, and long-term availability of cloned voices.

Organizations with defined brand governance or compliance requirements often establish internal policies before adopting cloning capabilities. Review licensing, data handling, and voice ownership terms carefully before scaling cloned voices across public-facing content.

Do Murf AI alternatives support natural language understanding and distinct vocal identities for elearning videos?

Some Murf AI alternatives incorporate natural language understanding to improve pronunciation and context handling during AI voice synthesis. Platforms built for structured elearning videos also provide consistent vocal identities and a video-native workflow, which helps teams update scripts and regenerate audio without disrupting production.
